In late 2011, Google announced an effort to make search behavior more secure. Logged-in users were switched from http to httpS. This encrypted their search queries from prying eyes and kept those queries from being passed on to the websites users visit after seeing search results.

This led to the problem we Marketers, SEOs and Analysts fondly refer to as not provided.

Following revelations of NSA activities via Mr. Snowden, Google has now switched almost all users to secure search, resulting in even more user search queries showing up as not provided in all web analytics tools.

Yahoo! has recently announced switching to httpS as the standard for all mail users, indicating secure search might follow next. That, of course, will mean more referring keyword data will disappear.

At the moment it is not clear whether Bing, Baidu, Yandex and others will move to similarly protect users’ search privacy; if and when they do, the result will be loss of even more keyword-level user behavior data.

Initially, I was a little conflicted about the whole not provided affair.

As an analyst, I was upset that this change would hurt my ability to analyze the effectiveness of my beloved search engine optimization (SEO) efforts – which are really all about finding the right users using optimal content strategies.

But it is difficult to not look at the wider picture. Repressive (and some not-overtly repressive) regimes around the world aggressively monitor user search behavior (and more). This can place many of our peer citizens in grave danger. As a citizen of the world, I was happy that Google and Yahoo! want to protect user privacy.

I'm a lot less conflicted now. I've gone through the five stages in the Kubler-Ross model. Besides, I've also come to realize that there is a lot I can still do!

In this post I want to share four angles on secure search: its implications, what is not going away, alternative sources of keyword data, and possible future solutions.

While not provided is not an optimal scenario, you'll see that things are not as bad as initial impressions might indicate. Yes, there are new challenges, but we also have some alternative solutions, and the SEO industry is not done innovating. Ready?

1. Implications of Secure Search Decision.

No keyword data in analytics tools.

We are headed towards having zero referring keywords from Google and, perhaps, other search engines.

This impacts all digital analytics tools, regardless of vendor and whether they use JavaScript, log files or magic beans to collect data.

Depending on the mobile device and browser you are using (for example, Safari since iOS 6), you have already been using secure search for a while regardless of the search engine you use. So that data has been missing for some time.

There are a number of "hacks" out there promising close-enough keyword data, or promising to marry not provided with some of the remaining data and landing pages. These are well meaning, but almost always yield zero value or, worse, drive you in a sub-optimal direction. Please be careful if you choose to use them.

No keyword data in competitive intelligence/SEO tools.

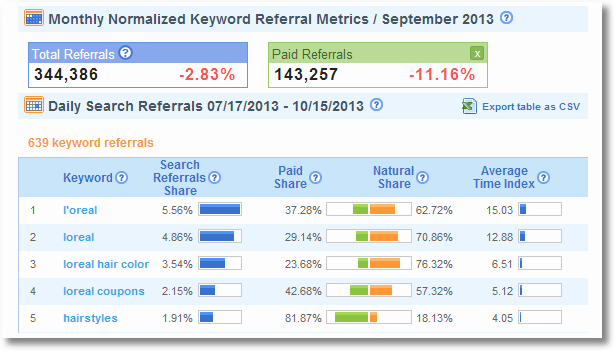

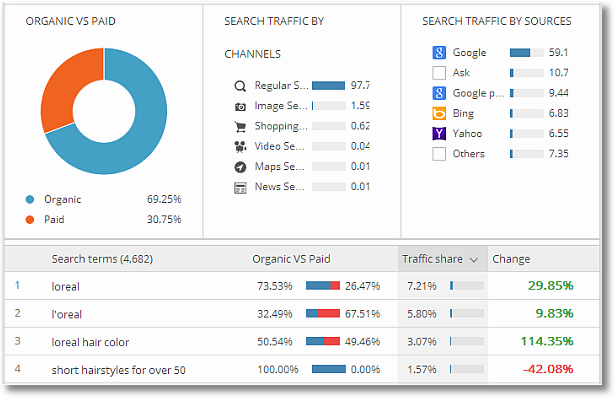

Perhaps you (like me) use competitive intelligence or SEO tools to monitor keyword performance. For example, for L'Oreal:

Secure search will also impact data in these tools. That data will become increasingly distorted because it will reflect only the small audience of visitors who are not yet using secure search, who are using other non-secure search engines, or who are the type of people who allow their behavior to be 100% monitored, including over SSL/httpS. A small sample, plus sampling bias.

I really loved having this data. It was such a great way to see what competitors were doing or where I was beating them on paid or organic or brand or category terms. Sadly, it does not matter which tool you use. These tools will only show you a more distorted view of reality. Please be very careful about what you do with keyword data from these tools (though they provide a lot of other data, all of which is of the same quality as in the past).

[sidebar]

These changes impact my AdWords spend sub-optimally. A lot of the keywords I used to add to my campaigns came from the long, long tail I saw in my organic search data (I would take the best performers there and use PPC to get more traffic) and from competitive intelligence research. With both of these sources gone, my AdWords spend may take a dive because I can't find these surprising keywords — even using the tools you'll see me mention below! How is this in Google's interest?

[/sidebar]

No keyword-level conversion analysis.

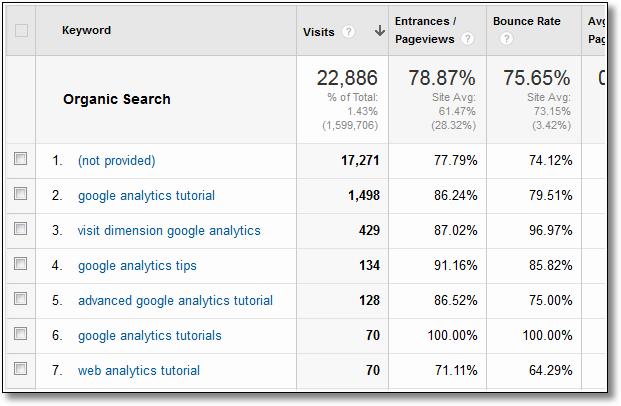

We have a lot of wonderful detailed data at a keyword level when we log into SiteCatalyst or WebTrends or Google Analytics. Bounce Rates, % New Visits, Visit Duration, Goal Conversions, Average Order Value.

All this data will no longer be available for organic search keywords.

As hinted above, our ability to understand the long tail (often as much as 80% of search traffic) will be curtailed. We can guess our brand terms and product keywords, but the wonderful harvest of category-type keywords, and beyond, is gone.

Current keyword data is only temporarily helpful.

The shrinking, increasingly skewed sample of keywords we can still see is one reason the data we have for the last year or so, even as not provided ramped up, might only be temporarily helpful in our analysis.

Another important reason historical data becomes stale pretty quickly is that any nominally functioning business will have new products, new content, new business priorities, and all that impacts your search strategy.

Finally, with every change in the search engine interface the way people use search changes. This in turn mandates new SEO (and PPC) strategies, if we don't want to fail.

So, use the data you have today for a little while to guesstimate your SEO performance or optimize your website. But know that the view you have will become stale and provide a distorted view of reality pretty soon.

2. What Is Not Going Away. #silverlinings

While we are losing our ability to do detailed keyword analysis, we are retaining our ability to do strategic analysis. Search engine optimization continues to be important, and we can still get a macro understanding of performance and identify potentially valuable keywords.

Aggregated search engine level analysis.

The Multi-Channel Funnels folder in Google Analytics contains the Top Conversion Paths report. At the highest level, looking across visits and focusing on unique people, the report shows the role search plays in driving conversions.

You can see how frequently it is the starting point for a later conversion, you can see how frequently it is in the middle, and you can see how frequently it is the last click.
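To make the "starting point, middle, last click" idea concrete, here is a minimal Python sketch that counts, for one channel, how often it opens, sits inside, or closes a conversion path. The path format (an ordered list of channel names per converting person), the sample paths and the `channel_roles` name are illustrative assumptions; the Top Conversion Paths report does this for you, and its exact counting rules may differ.

```python
from collections import Counter


def channel_roles(paths, channel):
    """Count how often `channel` starts, sits in the middle of, or ends a converting path.

    Each path is an ordered list of channel names for one converting person.
    A single-touch path counts as both "first" and "last".
    """
    roles = Counter()
    for path in paths:
        if not path:
            continue
        if path[0] == channel:
            roles["first"] += 1
        if channel in path[1:-1]:  # any touch strictly between the first and the last
            roles["middle"] += 1
        if path[-1] == channel:
            roles["last"] += 1
    return roles


# Hypothetical converting paths, in time order.
paths = [
    ["Organic Search", "Direct"],
    ["Email", "Organic Search", "Paid Search"],
    ["Organic Search"],
    ["Paid Search", "Organic Search"],
]
roles = channel_roles(paths, "Organic Search")
print(roles["first"], roles["middle"], roles["last"])  # 2 1 2
```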

I like starting with this report because it allows us to have a smarter beyond-the-last-click discussion and answer these questions: What is the complete role of Search in the conversion process? How does paid search interplay with organic search?

From there, jump to my personal favorite report in MCF, Assisted Conversions.

We can now look at organic and paid search differently, and we are able to see the complete value of both. We can see how often search is the last click prior to conversions, and how often it assists with other conversions.
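The last-click versus assist split can be sketched the same way. This is a simplified approximation, assuming the same hypothetical path format: a conversion counts as last-click for a channel when it is the final touch, and as assisted when the channel appears anywhere earlier in the path. The ratio of the two is roughly the kind of number the Assisted Conversions report shows per channel: well above 1, the channel mostly assists; close to 0, it mostly closes.

```python
def assist_summary(paths, channel):
    """Return (assisted, last_click, ratio) for `channel` across converting paths.

    Simplified definitions, not Google Analytics' exact logic:
    - last_click: the channel is the final touch before the conversion.
    - assisted: the channel appears anywhere before the final touch.
    A channel can be both for the same path. ratio is assisted / last_click,
    or None when the channel never closes a conversion.
    """
    assisted = sum(1 for path in paths if channel in path[:-1])
    last_click = sum(1 for path in paths if path and path[-1] == channel)
    ratio = assisted / last_click if last_click else None
    return assisted, last_click, ratio


# Hypothetical paths, one per converting person, in time order.
paths = [
    ["Organic Search", "Paid Search"],
    ["Organic Search", "Email", "Organic Search"],
    ["Paid Search"],
    ["Display", "Organic Search"],
]
print(assist_summary(paths, "Organic Search"))  # (2, 2, 1.0)
print(assist_summary(paths, "Paid Search"))  # (0, 2, 0.0)
```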

The reason I love the above view is that for each channel, I'm able to present our management team a simple, yet powerful, understanding of the contribution of our marketing channels – including search.

Selfishly, now we can show the complete value, in dollars and cents, we deliver via SEO.

If you are interested in only the last-click view of activities (please don't be interested in this!), you can of course look at your normal Channels or All Traffic reports in Google Analytics.

This is a simple custom report I use to look at the aggregated view:

As the report above demonstrates, you can still report on your other metrics, like Unique Visitors, Bounce Rates, Per Visit Value and many others, at an aggregated level. You can see how Google is doing, and you can see how Google Paid and Organic are doing.

So from the perspective of reporting organic search performance to senior management, you are all set. Where we are out of luck is taking things down from here to the keyword level. Yes, there will still be some data in the keyword report, but since not provided is an unknown unknown, you have no idea what that segment represents.

Organic landing pages report.

Search engine optimization is all about pages and the content in those pages.

See Page Value there? Now you also know how much value is delivered when each of these pages is viewed by someone who came from organic search.

So let's say you spent the last few weeks optimizing pages #2, #3 and #5; well, now you can be sad that they are delivering the lowest page value from organic search. Feel sad.

Or, just tell your boss/client: "No, no, no, you misunderstood. I was optimizing page #4!" : )
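Page Value deserves a quick explanation. Google Analytics documents it as (Transaction Revenue + Total Goal Value) divided by the page's Unique Pageviews, crediting value from sessions in which the page was seen before the goal or transaction. Here is a rough Python approximation under a hypothetical session format; feed it only sessions that arrived from organic search to get the organic view described above. It skips GA's "viewed before" ordering check, so treat it as an illustration, not a replica.

```python
def page_value(sessions, page):
    """Approximate Page Value: value of sessions that viewed `page` / unique pageviews.

    Each session is a dict with "pages" (pages viewed) and "value"
    (transaction revenue plus goal value; 0 if it did not convert).
    Simplification: unlike Google Analytics, this does not check that the
    page was viewed before the goal or transaction.
    """
    viewed = [s for s in sessions if page in s["pages"]]
    if not viewed:
        return 0.0
    # Each session counts once, which is what makes these unique pageviews.
    return sum(s["value"] for s in viewed) / len(viewed)


# Hypothetical organic search sessions.
sessions = [
    {"pages": ["/shoes", "/checkout"], "value": 100.0},
    {"pages": ["/shoes"], "value": 0.0},
    {"pages": ["/socks", "/checkout"], "value": 50.0},
]
print(page_value(sessions, "/shoes"))  # 50.0
print(page_value(sessions, "/socks"))  # 50.0
```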

The custom landing pages report also includes the ability to drill down to the keyword level; just click on the page you are interested in and you'll see this:

With every passing day this drilldown will become more and more useless. But for now, it is there if you want to see it.

Let me repeat a point. I've noticed some of our peer SEOs making strong recommendations to take action based on the keywords you are able to see beyond not provided. I'm afraid that is a career-limiting move. You have no idea what these words represent – head, mid, tail, something else – or what is in the blank not provided bucket. Be very careful.

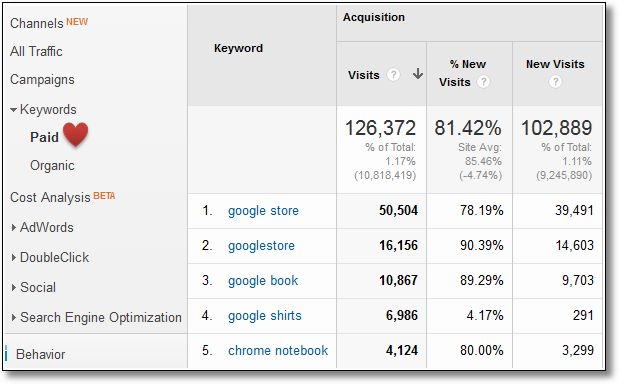

Paid search keyword analysis report.

We all of course still have access to keyword level analysis for our paid search spend.

There is one really interesting bit in the paid search reports that you can use for SEO purposes.

When you submit your keywords and bids, the search engine will match them against user search queries. In your AdWords reports in Google Analytics you see Keyword, as above, but if you create a custom report you can drill down from Keyword to Matched Search Query. The latter is what people actually typed. So for "chrome notebook," above, if I look at the Matched Search Query I can see all 25 variations users typed. This is very useful for SEO.
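If you export that custom report, a few lines of Python can roll the Matched Search Query variations up under each keyword so the long tail is easy to scan for SEO ideas. The (keyword, matched query, visits) row layout, the function name and the sample rows are hypothetical.

```python
from collections import defaultdict


def query_variations(rows):
    """Group matched search queries under the keyword that triggered them.

    rows -- iterable of (keyword, matched_query, visits) tuples.
    Returns keyword -> list of (matched_query, total_visits), most visits first.
    """
    grouped = defaultdict(lambda: defaultdict(int))
    for keyword, query, visits in rows:
        grouped[keyword][query] += visits  # the same query can appear in several rows
    return {
        keyword: sorted(queries.items(), key=lambda item: item[1], reverse=True)
        for keyword, queries in grouped.items()
    }


rows = [
    ("chrome notebook", "chromebook", 50),
    ("chrome notebook", "best chrome notebook", 20),
    ("chrome notebook", "chromebook", 5),
    ("laptop", "cheap laptop", 7),
]
print(query_variations(rows)["chrome notebook"])
# [('chromebook', 55), ('best chrome notebook', 20)]
```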

Beyond this, be judicious about what inferences you draw from your paid search performance. Some distinguished SEO experts have advocated using the distribution of visits/conversions/profits across your AdWords keywords to make decisions about the effectiveness of your SEO efforts. Others are advising you to bid on AdWords and guesstimate various things about SEO performance. Sadly, these are also career-limiting moves.

Why?

When you look at your AdWords data, you have no idea which of these four scenarios is true for your business:

And if you don't know which is true — and you really won't with not provided in your way — is it prudent to use your AdWords performance to judge SEO? I would humbly suggest not.

If you want to stress test this, go back to your 2011 (pre-not provided) data for paid and organic and see what you can find. And remember that since then Google has made sixteen trillion changes to how both paid and organic search work, and your business has made at least 25.

Don't assume that your SEO strategy should reflect the prioritizations implied by your AdWords keyword data. The reason SEO worked so well is that you would get traffic you might not have known/guessed/realized you wanted/deserved.

3. Alternatives For Keyword Data Analysis.

With not provided eliminating almost all of our keyword data, initially for some search engines/browsers and likely soon for all, we face challenges in understanding performance. Luckily, we can avail ourselves of several alternative, if imperfect/incomplete, options.

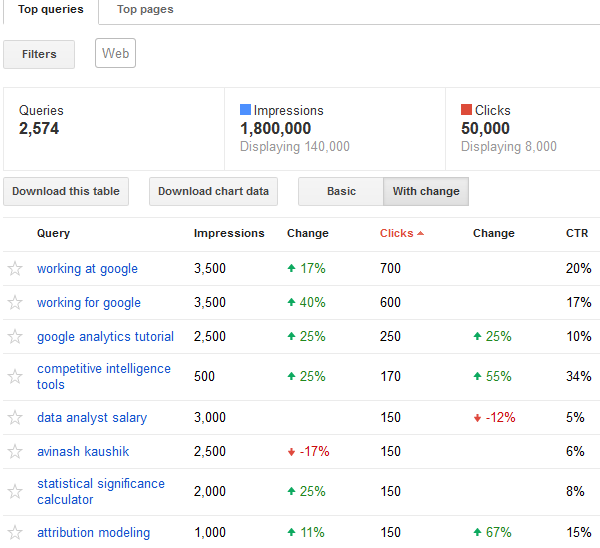

Webmaster Tools.

Here are the challenges Google's Webmaster Tools solves: Which search queries does my website show up for, and what does my click-through rate look like?

I know this might sound depressing, but this is the only place you'll see any SEO performance data at a keyword level. Look at the CTR column. If you do lots of good SEO (you work on the page title, URL, page excerpt, author image and all that wonderful stuff), this is where you can see whether that work is getting you more clicks. Work harder on SEO and raise your rankings (remember: don't focus on overall page rank, it is quite value-deficient), and you'll see higher CTRs.
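CTR is simply clicks divided by impressions, so comparing it before and after a round of SEO work is straightforward once you have two exports. A minimal Python sketch, assuming you have loaded each period into a dict of query to (clicks, impressions); the function names and figures are illustrative. CTR also shifts with ranking position and query mix, so read the change as directional.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction; 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0


def ctr_changes(before, after):
    """Per-query CTR change, in percentage points, between two periods.

    before, after -- dicts mapping query -> (clicks, impressions).
    Only queries present in both periods are compared.
    """
    return {
        query: (ctr(*after[query]) - ctr(*before[query])) * 100
        for query in before.keys() & after.keys()
    }


# Hypothetical figures before and after reworking a page's title and snippet.
before = {"running shoes": (30, 1000), "trail shoes": (10, 500)}
after = {"running shoes": (45, 1000), "hiking boots": (5, 100)}
changes = ctr_changes(before, after)
print(changes)  # "running shoes" gained about 1.5 percentage points
```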

You will see approximately 2,000 search queries. These are not all the search queries for which your site shows up. (More on this in the bonus section below.)

There are a couple of important things to remember when you use this data.

If you go back in history and do a comparative analysis of last year's data, when not provided was low, you'll notice that your top 100 keywords in Google Analytics or SiteCatalyst are not quite the same as those in Webmaster Tools. They use two completely different sources of data and data processing.

Be aware that even if you sort by Clicks (and always sort by clicks), the order in which these queries appear is not a true indication of their importance (in GA, when I could see it, I would see a different top 25, as an example). The numbers are also soft or directional. For example, even with 90% not provided, Google Analytics told me I had 500 visits from "avinash kaushik", not the 150 clicks shown above.

Despite these two caveats, Webmaster Tools should be a key part of your SEO performance analysis.

My hope is that, if this is how search engines are comfortable sharing keyword-level data, they will over time invest resources in this tool to increase the number of keywords and improve the data processing algorithms.

[Bonus]

1. Google's Webmaster Tools only stores your data for 90 days. If you would like to keep this data for a longer time period, you can download it as a CSV. Another alternative is to download it automatically using Python. Please see this post for instructions:

Automatically download webmaster tools search queries data

2. GWT only shows you data for approximately 2,000 queries which returned your site in search results. Hence it only displays a sub-set of your query behavior data. The impact of this is in the top part of the table above, Impressions and Clicks. During this time period my site received 1,800k Impressions in search results, but GWT is only showing data for 140k of those impressions because it is only displaying 2,574 user queries. Ditto for Clicks. If I download all the data for the 2k queries shown in GWT, that will show behavior for just 8,000 of the 50,000 clicks my site received from Google in this time period. Data for 42,000 clicks is not shown because those queries are beyond the 2k limit in GWT.

Update:

3. In his comment below, Jeff Smith shares a tip on how to structure your GWT account to possibly expand the dataset and get more information.

Please check it out.

Update:

4. Another great tip.

Kartik's comment highlights that you can link your GWT account with your AdWords account and get paid and organic click data for the same keyword right inside AdWords. Click to read a how-to guide and the available metrics.

[/Bonus]
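The two limits in the bonus points above (the 90-day window and the roughly 2,000-query cap) are easier to live with once you keep your CSV downloads and do a little arithmetic on them. Below is a minimal Python sketch, not the script from the linked post: `merge_export` keeps a growing archive of exports so data survives past 90 days, and `coverage` reproduces the share calculation from point 2. The column names `Query`, `Impressions` and `Clicks`, and the hand-chosen period labels, are assumptions; check them against your own download.

```python
import csv
import io


def merge_export(archive, export_csv, period):
    """Add one Webmaster Tools CSV export to a running archive.

    archive    -- dict mapping (period, query) -> {"impressions": int, "clicks": int}
    export_csv -- the CSV file contents as text
    period     -- a label you choose for the export's date range, e.g. "2013-09"

    Column names are assumptions; rename them to match your file. Re-importing
    the same period overwrites rows instead of duplicating them.
    """
    for row in csv.DictReader(io.StringIO(export_csv)):
        archive[(period, row["Query"])] = {
            # Strip thousands separators such as "1,200" before converting.
            "impressions": int(row["Impressions"].replace(",", "")),
            "clicks": int(row["Clicks"].replace(",", "")),
        }
    return archive


def coverage(shown, total):
    """Fraction of a total (impressions or clicks) that the displayed queries account for."""
    return shown / total


# The figures from point 2: 140k of 1,800k impressions, 8,000 of 50,000 clicks.
impressions_share = coverage(140_000, 1_800_000)
clicks_share = coverage(8_000, 50_000)
print(f"Impressions covered: {impressions_share:.1%}")  # Impressions covered: 7.8%
print(f"Clicks covered: {clicks_share:.1%}")  # Clicks covered: 16.0%
```

Periods are labeled by hand here because the sketch assumes each export is aggregated over its date range; if your file carries a date column, keying on that would be more robust.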

Google Keyword Planner.

The challenge Google Keyword Planner solves: What keywords (user search queries) should my search engine optimization program focus on?

In the Keyword Planner you have several options to identify the most current, most relevant keywords for your website. The simplest way to start is to look for keyword recommendations for a specific landing page.

I choose the "search for new keyword and ad group ideas" section and, in the landing page field, type in the URL I'm interested in. Just as an example, I'm using the Macy's women's activewear page:

A quick click of the Get Ideas button gives us … the ideas!

I can choose to look at the Ad Group ideas or the Keyword Ideas.

There are several specific applications for this delightful data.

First, it tells me the keywords for which I should be optimizing this specific page. I can go and look at the words I'm focused on, see if I have all the ones recommended by the Keyword Planner, and if not, I can include them for the next round of search engine optimization efforts.

Second, I have some rough sense for how important each word is, as judged by Avg. Monthly Searches. The volume can help me prioritize which keywords to focus on first.

Third, if this is my website (and Macy's is not!), I can also see my Ad Impression Share. Knowing how often my ad shows up for each keyword helps me prioritize my search engine optimization efforts.
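The first two uses can be combined into a short checklist: which recommended keywords is the page not yet targeting, ordered by Avg. Monthly Searches? A minimal Python sketch; the dict-of-volumes input, the function name and the sample volumes are all made up for illustration, and matching is a plain case-insensitive comparison with no stemming or synonyms.

```python
def keywords_to_add(recommended, targeted):
    """Recommended keywords the page does not target yet, highest volume first.

    recommended -- dict mapping keyword -> Avg. Monthly Searches
    targeted    -- keywords the page is already optimized for
    Matching is case-insensitive and exact; no stemming or synonym handling.
    """
    already = {kw.lower() for kw in targeted}
    missing = {kw: vol for kw, vol in recommended.items() if kw.lower() not in already}
    return sorted(missing, key=missing.get, reverse=True)


# Hypothetical Keyword Planner ideas for an activewear page (volumes are made up).
recommended = {
    "yoga pants": 5000,
    "women's activewear": 3000,
    "workout clothes": 2000,
    "running tights": 1000,
}
print(keywords_to_add(recommended, {"Women's Activewear"}))
# ['yoga pants', 'workout clothes', 'running tights']
```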

It would be difficult to do this analysis for all your website pages. I recommend the top 100 landing pages (check that the 100 include your top product pages and your top brand landing pages — if not, add them to the list).

With the advent of not provided we lost our ability to know which keywords we should focus on for which page; the Google Keyword Planner helps solve that problem to an extent.

You don't have to do your analysis just by landing pages. If you would like, you can have the tool give you data for specific keywords you are interested in. Beyond landing pages, my other favorite option is to use the Product Category to get data for a whole area of my business.

For example, suppose I'm assisting a non-profit hospital with its analytics and optimization efforts. I'll just drill down to the Health category, then the Health Care Service sub-category and finally the Hospitals & Health Clinics sub-sub-category:

Press Get Ideas button and — boom! — I have my keywords. In this case, I've further refined the list to only focus on a particular part of the US:

I have the keyword list I need to focus my search engine optimization efforts. Not based on what the Hospital CEO wants or what a random page analysis or your mom suggested, but rather based on what users in our geographic area are actually typing into a search engine!

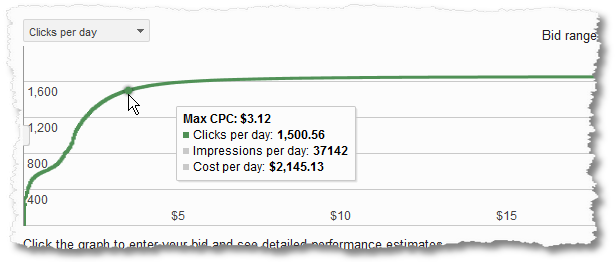

A quick note of caution: As you play with the Keyword Planner, you'll bump into a graph like this one for your selected keyword or ad group ideas. It shows Google's estimate of how many possible clicks you could get at a particular cost per click.

Other than giving you some sense for traffic, this is not a relevant graph. I include it here just to show you that it is out there and I don't want you to read too much into it.

Google Trends.

The challenge the Google Trends tool solves: What related and fastest-rising keywords should I focus on for my SEO program?

Webmaster Tools focuses us on clicks, and the Keyword Planner helps us with keywords to target by landing page. Google Trends is valuable because it helps expand our keyword portfolio (top searches) and identify the keywords under which we should be lighting a fire (rising searches).

Here's an example. I'm running the SEO program for Liberty Mutual, Geico, AAA or State Farm. My most important query is car insurance (surprise!). I can create a report in Google Trends for the query "car insurance" and look at the past 12 months of data for the United States.

The results are really valuable:

I can see which brand shows up at the top (sadly it’s not me, it’s Progressive), I can see the queries people are typing, and I can see the fastest-rising queries and realize I should worry about Safeco and Arbella. I can also see that Liberty Mutual's massive TV blitz is having an impact in increasing brand awareness and Geico seems to be having support problems with so many people looking for its phone number.

I can click on the gear icon at the top right and download a bunch more data, beyond the top ten. I can also focus on different countries, on just some of the states in the US, or on only the last 90 days. The options are endless.

There are two specific uses for this data.

First, I get the top and rising queries to consider for my SEO program. Not just queries either, but deeper insights like brand awareness, etc.

Second, I can use this to figure out the priorities for the content I need to create on my website to take advantage of evolving consumer interests and preferences.

If you have an ability to react quickly (not real-time, just quickly) the Google Trends tool can be a boon to your SEO efforts.

Competitive Intelligence / SEO Tools.

Competitive intelligence tools solve the challenge of knowing: What are my competitors up to? What is happening in my product/industry category when it comes to search?

SEO tools solve the challenge of knowing: What can I do to improve my page ranks, inbound links, content focus, social x, link text y, etc.?

There are many good competitive intelligence tools out there. They will continue to be useful for other analysis (referring domains, top pages, display ads, overall traffic etc.), but as I mentioned at the top of this post, the search keyword level data will be of even lower quality. Here's a report I ran for L'Oreal:

If you see any keyword level data in these tools, you should assume that you are getting a distorted view of reality. Remember, all other data in these tools is fine. Just not any of the keyword level data.

There are many good SEO tools out there that provide a wide set of reports and data. As in the case of the CI tools, many other reports in these SEO tools will remain valuable, but not the keyword level reports. As not provided moves toward 100% with search switching to httpS, they will also lose their ability to monitor referring keywords (along with the aforementioned repressive and sometimes not-so-overtly repressive regimes).

When the keywords are missing, the SEO tools will have to figure out if the recommendations they are making about "how to rank better with Bing/Google/Yahoo!" or "do a, b, c and you will get more keyword traffic" are still valid. At a search engine level they will remain valid, but at a keyword level they might become invalid very soon (if they're not already).

Even at a search engine level, causality (in other words, “do x and y money will come to you”) will become tenuous and the tools might switch to correlations. That is hard and poses a whole new set of challenges.

Some of the analysis these tools start to provide might take on the spirit of: "We don't know whether factors m, n, and q that we are analyzing/recommending, or all this link analysis and link text and brand mentions and keyword density, specifically impact your search engine optimization/ranking at a keyword level, or if our recommendations move revenue, but we believe they do and so you should do them."

There is nothing earth-shatteringly wrong about it. It introduces a fudge factor, a risky variable. I just want you to be aware of it. And if you want to feel better about this, just think of how you make decisions about offline media – that is entirely based on faith!

Just be aware of the implications outlined above, and use the tools/recommendations wisely.

4. Possible Future Solutions.

Let's try to end on a hopeful note. Keyword data is almost all gone; what else could take its place in helping us understand the impact of our search engine optimization efforts? Just because the search engines are taking keywords away does not mean SEO is dead! If anything, it is even more important.

Here are a couple of ideas that come to my mind as future solutions/approaches. (Please add yours via comments below.)

Page "personality" analysis.

At the end of the day, what are we trying to do with SEO? We are simply trying to ensure that the content we have is crawled properly by search engines and that during that process the engines understand what our content stands for. We want the engines to understand our products, services, ideas, etc. and know that we are the perfect answer for a particular query.

I wonder if someone can create a tool that will crawl our site and tell us what the personality of each page represents. Some of this is manifested today as keyword density analysis (which is value-deficient, especially because search engines got over "density" nine hundred years ago). By personality, I mean what does the page stand for, what is the adjacent cluster of meaning that is around the page's purpose? Based on the words used, what attitude does the page reflect, and based on how others are talking about this page, what other meaning is being implied on a page?

If the Linguistic Inquiry and Word Count (LIWC) can analyze my email and tell me the 32 dimensions of my personality, why can't someone do that for my site's pages beyond a dumb keyword density analysis?

If I knew the personality of the page, I could optimize for that and then the rest is up to the search engine.

Crazy idea? Or crazy like a fox idea? : )

Non-individualized (not tied to visits/cookies/people) keyword performance data.

A lot of the concern related to privacy is valid, and even urgent when these search queries are tied to a person. The implications can be grim in many parts of the world.

But, I wonder if Yahoo!/Bing/Google/Yandex would be open to creating a solution that delivers non-individualized keyword level performance data.

I would not know that you, let's say Kim, came to my website on the keyword "avinash rocks so much it is pretty darn awesome" and that you, Kim, converted, delivering an order of $45. But the engines could tell us that the keyword "avinash rocks so much it is pretty darn awesome" delivered 100 visits, of which 2% converted, delivering $xx,xxx in revenue.

Think of it as turbo-charged Webmaster Tools: take what it has today and connect it to a conversion tracking tag. This protects user privacy, but gives me (and you) a better glimpse of performance and hence better focus for our organic search optimization efforts.

Maybe the search engines can just give us all keywords searched more than 100 times (to protect privacy even more). Still non-individualized.

I don't know the chances of this happening, but I wanted to propose a solution.
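Here is what that engine-side roll-up could look like, as a minimal Python sketch of the idea rather than anything a search engine actually offers: individual visits go in, only keyword-level totals come out, and rarely seen keywords are withheld. The tuple layout, the `aggregate_keywords` name and the threshold semantics are assumptions; visits stand in for searches here.

```python
from collections import defaultdict


def aggregate_keywords(visits, min_visits=100):
    """Roll individual visits up to keyword-level totals, suppressing rare keywords.

    visits     -- iterable of (keyword, converted, revenue) tuples; no user
                  identifiers are accepted, so none can leak into the output.
    min_visits -- keywords need more than this many visits to be reported
                  (a stand-in for the "searched more than 100 times" idea).
    """
    totals = defaultdict(lambda: {"visits": 0, "conversions": 0, "revenue": 0.0})
    for keyword, converted, revenue in visits:
        t = totals[keyword]
        t["visits"] += 1
        t["conversions"] += int(converted)
        t["revenue"] += revenue
    return {
        keyword: {**t, "conversion_rate": t["conversions"] / t["visits"]}
        for keyword, t in totals.items()
        if t["visits"] > min_visits
    }


# Hypothetical data: 101 visits on one keyword (2 converted at $45), 1 visit on a rare keyword.
visits = [("avinash rocks", i < 2, 45.0 if i < 2 else 0.0) for i in range(101)]
visits.append(("rare query", True, 10.0))
report = aggregate_keywords(visits)
print(sorted(report))  # ['avinash rocks']
```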

Controlled experimentation.

Why not give up on the tools/data and learn from our brothers and sisters in TV/Print/Billboards land and use sophisticated controlled experiments to prove the value of our SEO efforts?

(Remember: Using the alternative data sources covered above, you already know which keywords to focus your efforts on.)

In the world of TV/Radio/Print we barely have any data – and what we do have is questionable – hence the smartest in the industry are using media mix modeling to determine the value delivered by an ad.

We can do the same now for our search optimization efforts.

First, we follow all the basic SEO best practices. Make sure our sites are crawlable (no JavaScript-wrapped links, pop-ups with crazy code, Flash-heavy gates, page tabs using magic to show up, etc.), make sure the content is understandable (no titles in images, unclear product names or other crazy stuff), and be super fantastically sure about what you are doing when you make every page dynamic and "personalized customized super-relevant" to each visitor. Now it does not matter what ranking algorithm the search engine is using; it understands you.

Now it's time for the SEO Consultant's awesomely awesome SEO strategy implementation.

Try not to go whole hog. Pick a part of the site to unleash the awesomely awesome SEO strategy. One product line. One entire directory of content. A section of solutions. A cleanly isolatable cluster of pages/products/services/solutions/things.

Implement. Measure the impact (remember you can measure at a Search Engine and Organic/Paid level). If it’s a winner, roll the strategy out to other pages. If not, the SEO God you hired might only be a seo god.
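For the "measure the impact" step, one common way to read a test like this (not the only one, and not media mix modeling) is a difference-in-differences: compare how organic search traffic changed in the section you optimized against a comparable section you left alone, so seasonality and search-engine-wide shifts cancel out. A minimal Python sketch with made-up numbers; it assumes the two sections would have trended alike without the change.

```python
def diff_in_diff(test_before, test_after, control_before, control_after):
    """Absolute lift: the test section's change minus the control section's change."""
    return (test_after - test_before) - (control_after - control_before)


def relative_lift(test_before, test_after, control_before, control_after):
    """Relative lift: the test section's growth rate minus the control section's."""
    return test_after / test_before - control_after / control_before


# Hypothetical organic search visits over equal windows before and after launch.
test = (1000, 1300)     # the section that got the new SEO strategy
control = (2000, 2100)  # a comparable section left unchanged
print(diff_in_diff(*test, *control))  # 200
print(f"{relative_lift(*test, *control):.0%}")  # 25%
```

A single before/after pair is noisy; several periods, or several test and control sections, make the call on "winner or not" far more trustworthy.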

At some level, exactly as in the case of TV/Radio/Print, this is deeply dissatisfying because it takes time, it requires your team to step up their analytical skills and often you only understand what is happening and not why. But it is something.

I genuinely believe the smartest SEOs out there will go back to school and massively upgrade their experimentation and media mix modeling skills. A path to more money via enriching skills and reducing reliance on having perfect data.

There is no doubt that secure search, and the delightful result not provided, creates a tough challenge for all Marketers and Analysts. But it is here, and I believe here to stay.

My effort in this post has been to show that things are not as dire as you might have imagined (see the not going away and alternatives sections). We can fill some gaps, we can still bring focus to our strategy. I'm also cautiously optimistic that there will be future solutions that we have not yet imagined that will address the void of keyword level performance analysis. And I know for a fact that many of us will embrace controlled experimentation and thereby rock more and charge more for our services or get promoted.

Carpe diem!

As always, it is your turn now.

I'm sure you have thoughts/questions on why not provided happened. You might not have made it through all five stages of the Kubler-Ross model yet. That is OK, I respect your questions and your place in the model. Sadly, I'm not in a position to answer your questions about that specifically. So, to the meat of the post …

Is there an implication of not having keyword level data that I missed covering? From the data we do have access to, search engine level, is there a particular type of analysis that is proving to be insightful? Are there other alternative data sources you have found to be of value? If you were the queen of the world and could create a future solution, what would it do?

Please share your feedback, incredible ideas, practical solutions and OMG-you-totally-forgot-that-thing thoughts via comments.

Thank you.

PS: Here's my post on how to analyze keyword performance in a world where only a part of the data was in the not provided bucket:

Smarter Data Analysis of Google's https (not provided) change: 5 Steps

For all the reasons outlined in this post, that smarter data analysis option might not work any more. But if only a small part of your data, for any reason, is not provided, please check out the link.

via Occam's Razor by Avinash Kaushik http://www.kaushik.net/avinash/secure-search-not-provided-keyword-analysis-data-sources/
